Biography

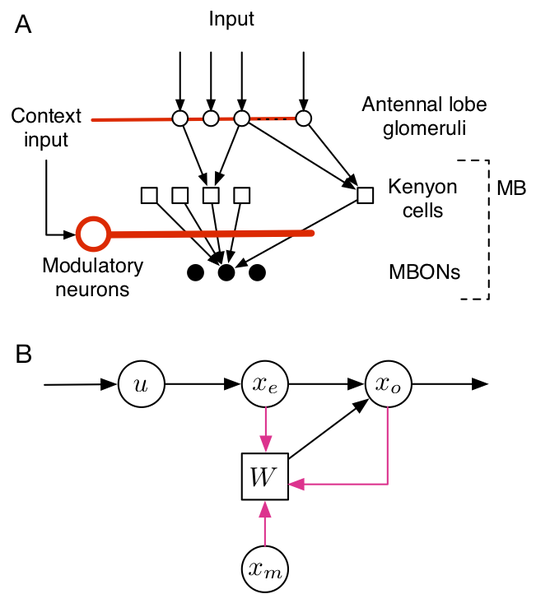

Sandeep Madireddy is a Computer Scientist in the Mathematics and Computer Science Division at Argonne National Laboratory. His research spans probabilistic machine learning, bio-inspired and energy-efficient learning, high-performance computing, and generative AI, with an emphasis on safety and robustness. His work integrates algorithmic research in these areas with applied research aimed at advancing scientific discovery in critical areas such as fusion energy sciences, cosmology and high-energy physics, weather and climate, and materials science.

He was previously a postdoc and assistant computer scientist in the Mathematics and Computer Science Division, advised by Prasanna Balaprakash and Stefan Wild. Before joining Argonne, he obtained his Ph.D. in mechanical and materials engineering (focusing on probabilistic machine learning) from the University of Cincinnati, as part of the UC Simulation Center (a Procter & Gamble collaboration). Before that, he obtained his master's degree from Utah State University and his bachelor's degree from Birla Institute of Technology and Science (BITS-Pilani) in India.

Sandeep serves as a Co-investigator (and AI lead) for DOE and NSF-funded projects:

- AuroraGPT, Co-lead for Evaluation and AI-Safety Thrust

- RAPIDS2: A SciDAC Institute for Computer Science, Data, and Artificial Intelligence, Argonne AI Thrust lead

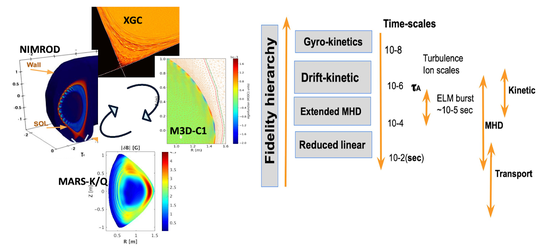

- EFIT-AI: ML/AI Assisted Tokamak Equilibrium Reconstruction, Co-investigator and AI Lead

- CETOP: A Center for Edge of Tokamak OPtimization, Co-investigator and AI Lead

- Probabilistic Machine Learning for Rapid Large-Scale and High-Rate Aerostructure Manufacturing (PRISM), Co-investigator and AI Lead

- Enabling Cosmic Discoveries in the Exascale Era, Co-investigator

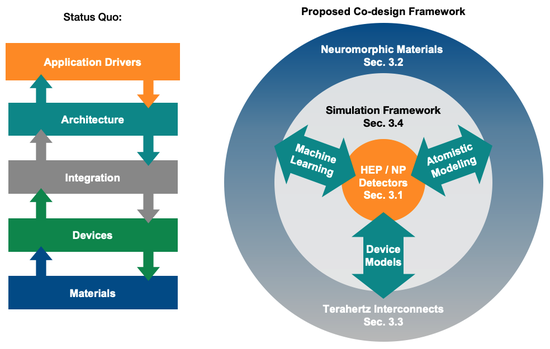

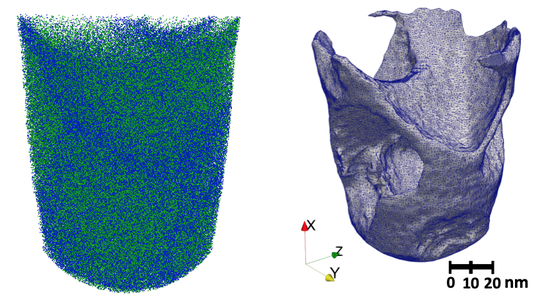

- A Transformative Co-Design Approach to Materials and Computer Architecture Research, Co-investigator

- Efficient Microelectronics, Co-investigator

- ScaleStuds: Foundations for Correctness Checkability and Performance Predictability of Systems at Scale, Co-investigator

Sandeep also provides professional service to various machine learning, high-performance computing, and domain science conferences and journals.

- PC member (HPC conferences): Supercomputing 2023, CCGRID 2024, INFOCOMP 2019-23, HPCC 2017; Reviewer: IPDPS, IEEE Cluster

- Reviewer (AI conferences): ICML (2021-24); ICLR (2021-24); NeurIPS (2021-24); AISTATS (2023)

- Journals: ML/AI (Neural Networks, Neurocomputing, JMLMC, SIAM SISC); HPC (JPDC, TPDS, Parallel Computing, TCC, JoS); Domain science (MNRAS, CISE, IEEE TPSC, JoLT)